Market Landscape, Applications, and Opportunities for Small Research Teams

Overview

The biotech and pharma sectors stand at a critical juncture, pressured by the rapid advancement and adoption of Artificial Intelligence (AI). Moving beyond traditional predictive modeling and data analysis, a new paradigm of AI agents, also known as agentic AI, is emerging as of early 2025. These agents represent a leap towards autonomous systems capable of complex decision-making, planning, and task execution across the entire biopharma value chain, from initial drug discovery and preclinical research through clinical trial optimization, manufacturing, and regulatory processes.1 Unlike earlier AI iterations that primarily responded to prompts or followed preset rules, AI agents can pursue high-level goals, break them down into manageable steps, utilize external tools and data sources, and adapt their strategies based on real-time feedback, often with minimal human intervention.3

These developments hold considerable promise for addressing the long-standing challenges of drug development: high costs, lengthy timelines, and high failure rates.1 By automating complex workflows, analyzing vast and diverse datasets (including genomic, proteomic, clinical, and literature data), generating novel hypotheses, and even assisting in experimental design and execution, AI agents can significantly enhance R&D productivity and potentially increase the probability of success.1 The market reflects this potential, with projections indicating substantial growth for AI in drug discovery and the broader biopharma sector, reaching tens of billions of dollars within the next decade, fueled by significant venture capital investment and strategic partnerships.12

The competitive landscape features a mix of established players and dynamic challengers. Dominant forces include AI-native biotechnology companies like Recursion Pharmaceuticals (now including Exscientia) and Insilico Medicine, which have built end-to-end platforms integrating AI with wet-lab capabilities; computational science leaders like Schrödinger, which are increasingly incorporating AI into their physics-based platforms; and specialized providers like Causaly, focusing on knowledge discovery and agentic research assistance.12 Large technology companies such as Google (via DeepMind, Isomorphic Labs, and Google Cloud) and NVIDIA are also playing crucial roles by providing foundational models, powerful computing infrastructure, and specialized frameworks like BioNeMo.16 Emerging challengers include numerous startups focusing on niche applications, novel AI techniques, or specific therapeutic areas, alongside Contract Research Organizations (CROs) integrating AI into their service offerings.27

However, despite the technological advancements and market enthusiasm, significant hurdles remain, particularly for small biotech research teams. These teams often operate under severe resource constraints, including limited budgets, lack of access to high-performance computing infrastructure, and a shortage of specialized AI and data science talent.32 Furthermore, they grapple with complex R&D workflows, data silos, poor data quality, and challenges in integrating disparate software tools like Electronic Lab Notebooks (ELNs) and Laboratory Information Management Systems (LIMS).33 The high cost and complexity of many existing AI platforms, often designed with large pharmaceutical companies in mind, create significant adoption barriers for these smaller organizations.35

This analysis reveals a critical unmet need: a cohesive, user-friendly, and cost-effective AI agents platform specifically tailored to the workflows, resource limitations, and data challenges of small biotech research teams. Such a platform would need to prioritize ease of use, seamless integration with common lab software and data sources, and robust data management capabilities, and it would need to provide access to pre-built, validated agentic workflows for tasks like automated literature review, hypothesis generation, and experimental design assistance. Addressing this gap presents a significant market opportunity to democratize access to advanced AI capabilities and empower the smaller innovators that drive a substantial portion of the biopharma pipeline.

Understanding AI Agents in the Life Sciences Context

Defining Agentic AI: Beyond Traditional AI/ML

Within the biotech and pharma landscape of early 2025, the term “AI agents” or “agentic AI” signifies a distinct evolution beyond traditional Artificial Intelligence (AI) and Machine Learning (ML) approaches.1 While conventional AI/ML often excels at specific, narrowly defined tasks like pattern recognition from large datasets 43, making predictions based on learned correlations 45, or engaging in conversational interactions via chatbots 47, agentic AI introduces a higher degree of autonomy and goal-directed behavior.3

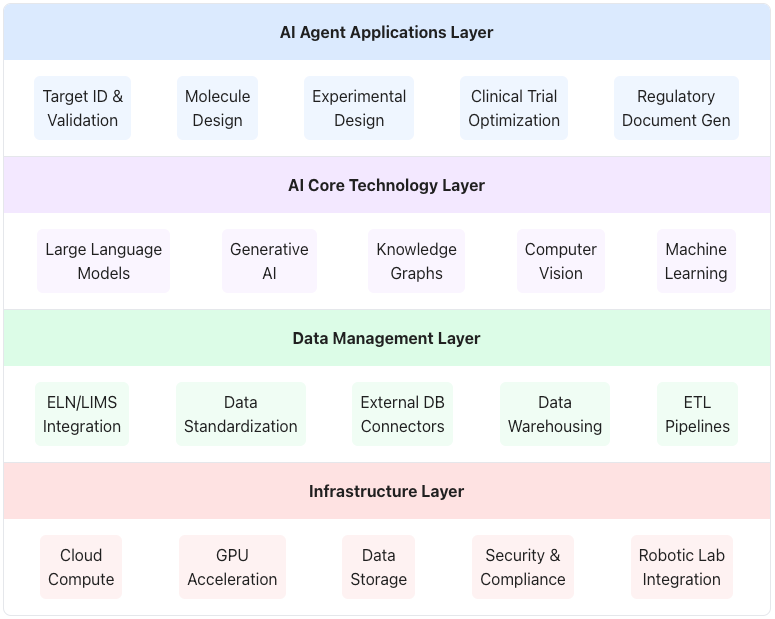

The AI agent technology stack in biotech consists of multiple layers, from infrastructure to applications. Each layer contains specialized technologies and capabilities that enable the development and deployment of AI agents for specific biotech R&D tasks.

Figure 1: Layered Technology Stack Showing How AI Agents Are Deployed in Biotech from Infrastructure to Applications

Agentic AI systems are designed to receive high-level objectives—such as “identify potential drug candidates for disease X” or “optimize the protocol for clinical trial Y”—and autonomously plan, orchestrate, and execute the necessary steps to achieve these goals with minimal ongoing human supervision.4 This contrasts sharply with traditional AI, which typically requires explicit instructions for each step or operates within predefined rules.3 AI agents possess the capability to break down complex objectives into smaller, manageable sub-tasks, make decisions in real-time, and dynamically adjust their strategies based on incoming data, environmental feedback, or intermediate results.1 This adaptive nature makes them particularly suited for the complex and often unpredictable workflows inherent in drug discovery and development. The underlying technology often builds upon powerful foundation models, like Large Language Models (LLMs), but critically augments them with capabilities for reasoning, planning, interaction with the environment (including digital tools and data sources), and memory access.6 Alternative nomenclature, such as Large Action Models (LAMs) or Large Agent Models, also exists but points to the same core concept of AI systems that can act autonomously to achieve goals.47

The fundamental difference lies in the transition from AI primarily serving as a predictive or analytical tool to AI functioning as an autonomous actor or collaborative partner within the research process.3 These agents are not just processing information; they are designed to execute tasks, interact with systems, and drive processes forward.5 This shift necessitates more than just powerful algorithms; it requires robust platform architectures that support planning, tool integration, memory, and potentially multi-agent coordination.6

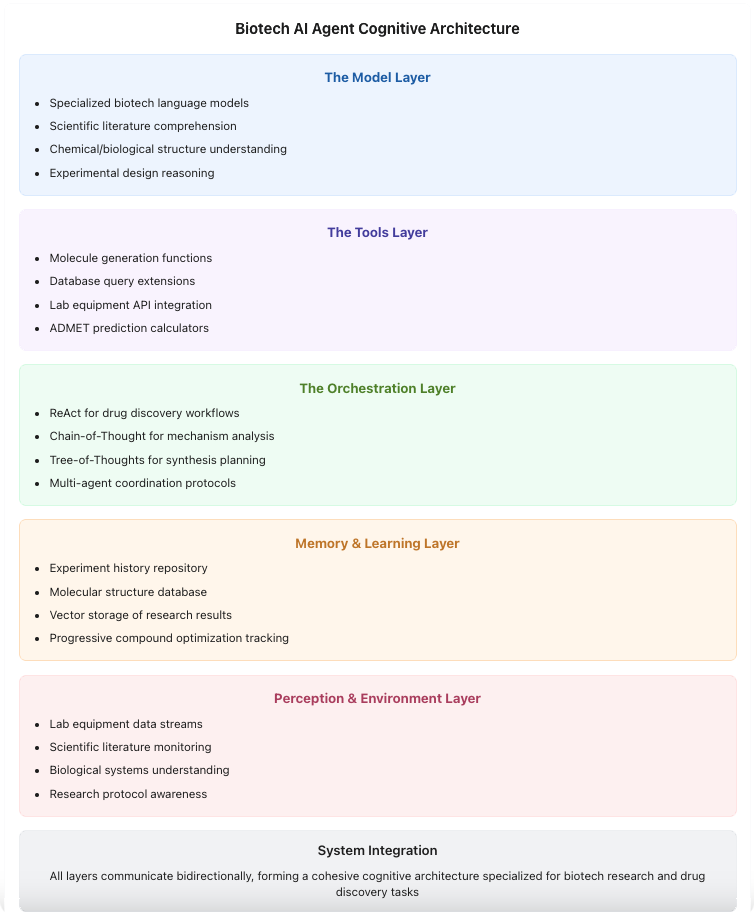

Another way to visualize how AI agents are used in biotech is the “Biotech AI Agent Cognitive Architecture,” which represents how information is processed by the different components of the AI agent environment.

Figure 2: Cognitive Architecture of Biotech AI Agents Showing Core Capabilities and Relationships

Core Capabilities: Autonomy, Planning, Tool Use, and Learning

As of early 2025, AI agents operating in the biotech and pharma domain are characterized by several core capabilities that distinguish them from previous AI systems:

- Autonomy: The defining feature is the ability to operate with significant independence.3 Agents can receive a high-level directive and work towards it without requiring step-by-step human guidance.4 They monitor their progress, interpret incoming data (potentially from multiple modalities like text, images, omics data 6), and make real-time decisions to adjust their approach as needed.1 This autonomy enables them to handle complex, multi-step processes in dynamic environments.4

- Planning & Goal Orientation: AI agents are inherently goal-directed.5 They possess planning capabilities that allow them to decompose a complex objective into a sequence of smaller, actionable sub-tasks.3 This involves reasoning about the steps needed, the tools required for each step, and the order of execution.4 For example, an agent tasked with regulatory document generation might plan to first retrieve data, then draft sections using templates, then validate against guidelines.4

- Tool Use: A critical capability enabling agents to execute tasks in the real world is their ability to interact with and utilize various external tools and systems.5 This goes beyond internal model computations and includes calling APIs to access databases (e.g., PubMed, FDA databases, ChEMBL) 4, browsing the web for information 5, querying internal company systems (like ELNs, LIMS, CRMs) 4, sending emails, writing code, or even controlling physical systems like laboratory robots.5 This interaction allows agents to gather necessary information, perform actions, and integrate with existing research infrastructure.

- Learning & Adaptation: Effective agents are designed to learn and adapt over time.3 This can involve learning from the outcomes of their actions, incorporating new data to refine models, or utilizing memory mechanisms.6 Retrieval mechanisms and databases can provide short-term memory for context during a task and long-term memory for accumulating knowledge and improving performance based on past experiences.6 Some agents are explicitly categorized as “Learning Agents”.3

Furthermore, the concept extends to Multi-Agent Systems (MAS), where multiple specialized AI agents collaborate (or sometimes compete) to tackle highly complex problems.3 Examples include managing intricate pharmaceutical supply chains through coordinated logistics, inventory control, and demand forecasting 3, or envisioned “AI scientists” composed of collaborative agents integrating different AI models, biomedical tools, and experimental platforms.49 The emergence of multi-agent neurosymbolic AI applications capable of machine-to-machine collaboration was anticipated for 2025.54 This capability for tool use and potential multi-agent orchestration signifies that designing effective agentic platforms requires sophisticated frameworks beyond just model hosting, encompassing planning engines, secure tool integration interfaces, agent communication protocols, and robust memory systems.
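The interplay of planning, tool use, and memory described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's implementation: the `Agent` class, the three tool functions, the study record, and the hard-coded plan are all hypothetical, and the plan mirrors the regulatory document example given earlier (retrieve data, draft from a template, validate).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A tool takes the shared task state and returns new fields to merge into it.
Tool = Callable[[dict], dict]


def retrieve_data(state: dict) -> dict:
    # Hypothetical stand-in for a query against a LIMS or clinical database.
    return {"data": {"study_id": "STUDY-001", "n_subjects": 120}}


def draft_section(state: dict) -> dict:
    # Fill a fixed template; a real agent might call a language model here.
    d = state["data"]
    return {"draft": f"Study {d['study_id']} enrolled {d['n_subjects']} subjects."}


def validate_draft(state: dict) -> dict:
    # Toy guideline check: the draft must cite the study identifier.
    return {"valid": state["data"]["study_id"] in state["draft"]}


@dataclass
class Agent:
    tools: Dict[str, Tool]
    memory: List[dict] = field(default_factory=list)  # record of executed steps

    def plan(self, goal: str) -> List[str]:
        # Hard-coded plan (assumption); a real planner would derive it from the goal.
        plans = {
            "generate_regulatory_document": [
                "retrieve_data",
                "draft_section",
                "validate_draft",
            ]
        }
        if goal not in plans:
            raise ValueError(f"no plan for goal: {goal}")
        return plans[goal]

    def run(self, goal: str) -> dict:
        state: dict = {"goal": goal}
        for step in self.plan(goal):
            result = self.tools[step](state)  # tool use
            state.update(result)              # carry results forward
            self.memory.append({"step": step, "result": result})
        # Escalate to a person whenever validation did not pass.
        state["needs_human_review"] = not state.get("valid", False)
        return state


TOOLS: Dict[str, Tool] = {
    "retrieve_data": retrieve_data,
    "draft_section": draft_section,
    "validate_draft": validate_draft,
}

agent = Agent(tools=TOOLS)
outcome = agent.run("generate_regulatory_document")
print(outcome["draft"], "| needs review:", outcome["needs_human_review"])
```

Keeping the plan, the tool registry, and the memory log as separate pieces is what lets a platform swap in a smarter planner or add tools without touching the execution loop.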

The Significance of AI Agents for Biotech/Pharma R&D Productivity

The emergence of AI agents holds profound significance for the biotechnology and pharmaceutical industries, primarily due to their potential to fundamentally address persistent challenges in R&D productivity. The traditional drug discovery and development process is notoriously slow, exceptionally expensive, and plagued by high failure rates, with estimates suggesting costs exceeding $2 billion per approved drug and timelines often spanning over a decade.1 The pressure to improve efficiency is further amplified by factors like the looming patent cliff, which threatens significant revenue streams for major pharmaceutical companies.7

AI agents offer a potential solution by automating complex, data-intensive, and often repetitive tasks that consume significant researcher time and resources.2 This includes activities like sifting through vast amounts of scientific literature and patents 43, analyzing complex datasets from genomics, proteomics, and clinical trials 1, managing multi-step experimental workflows 48, generating regulatory documents 2, and optimizing clinical trial logistics.2 By handling these tasks autonomously, AI agents can free up human scientists to focus on higher-value activities such as strategic decision-making, complex problem-solving, interpreting nuanced results, and generating novel scientific hypotheses.2

The potential impact extends across multiple dimensions:

- Accelerated Timelines: By automating tasks and processing data much faster than humans, AI agents can significantly shorten various stages of the R&D lifecycle, from target identification to clinical trial reporting.1 Some estimates suggest AI could reduce drug development time by years.60

- Reduced Costs: Automation and increased efficiency can lead to substantial cost savings by reducing manual labor, minimizing trial-and-error experimentation, optimizing resource allocation, and potentially lowering clinical trial costs.1

- Improved Precision and Success Rates: AI agents can analyze data with greater precision, identify subtle patterns missed by humans, optimize drug candidate properties, and potentially predict clinical outcomes more accurately, thereby increasing the probability of success and reducing late-stage failures.1 AI-discovered drugs in Phase 1 trials have shown potentially higher success rates compared to traditional methods.64

This potential for transformative change suggests that AI agents are not merely tools for incremental improvement but catalysts for a paradigm shift towards a more industrialized, data-driven, and efficient model of drug discovery and development.7 The key lies in their ability to orchestrate entire workflows involving multiple steps, tools, and data sources, moving beyond single-task automation.2 Realizing this potential, however, necessitates a re-evaluation of existing R&D processes and organizational structures to effectively integrate and collaborate with these autonomous systems, breaking down traditional silos.36

Applications of AI Agents Across the Biotech/Pharma Value Chain (as of April 2025)

AI agents are demonstrating applicability across the entire biopharmaceutical value chain, moving beyond theoretical potential to practical implementation in various stages. Their ability to autonomously plan, execute, and learn is driving innovation and efficiency from early research to post-market activities.

New Territory for AI Agents in Drug Discovery and Preclinical Research

This is arguably the area where AI agents are having the most profound impact, tackling the core challenges of identifying and validating effective drug candidates.

- Target Identification & Validation: AI agents excel at navigating the vast and complex biological data landscape to pinpoint promising drug targets. They can autonomously ingest and analyze diverse datasets, including genomics, proteomics, transcriptomics, clinical trial data, scientific literature (PubMed), and patent databases.1 By identifying patterns, correlations, and causal relationships often missed by human researchers, agents can propose novel targets or validate existing ones with greater speed and confidence.49 Platforms like Causaly’s Discover exemplify this, using agentic AI to synthesize information from internal and external sources, including knowledge graphs like the Human Protein Atlas, to generate comprehensive reports and answer complex research questions autonomously.8 Some platforms, like Recursion’s OS, employ knowledge graphs to evaluate signals and perform target deconvolution, assessing factors like protein structure, competitive landscape, and clinical trial data.69 This capability significantly accelerates the initial stages of drug discovery.

- Molecule Design & Optimization (Generative Chemistry): Leveraging generative AI, particularly techniques like Generative Adversarial Networks (GANs) and reinforcement learning, AI agents can design entirely novel molecular structures tailored to specific target profiles.2 These agents can explore vast chemical spaces, optimizing generated molecules for multiple parameters simultaneously, such as binding affinity, selectivity, solubility, metabolic stability, and bioavailability (ADMET properties).1 When I learned about this sophisticated capability of agent systems, my jaw dropped in amazement. Companies like Exscientia (using platforms historically including Centaur Chemist) 2, Insilico Medicine (with its Chemistry42 module) 80, and Recursion (with its LOWE agent) 83 have demonstrated capabilities in generating and optimizing drug candidates using AI. This approach moves beyond screening existing libraries to creating bespoke molecules with desired characteristics.

- Predictive Toxicology & ADMET: A major cause of drug failure is unforeseen toxicity or poor pharmacokinetic properties. AI agents are being deployed to predict these liabilities earlier in the discovery pipeline.1 By analyzing structural features, preclinical data, and known toxicological patterns, agents can flag potentially problematic compounds, allowing resources to be focused on more promising candidates.43 Early prediction significantly de-risks the development process. Schrödinger, for example, launched an initiative leveraging its physics-based platform combined with AI/ML to develop computational models for predictive toxicology against a panel of known off-target proteins associated with side effects.85 The company is developing this predictive toxicology platform with funding from the Bill & Melinda Gates Foundation, with the aim of improving drug candidate properties and reducing development risks. The platform is expected to go live in the second half of 2025.

- Automated Literature Review & Hypothesis Generation: The exponential growth of scientific literature makes it impossible for human researchers to stay fully abreast of all relevant findings. AI agents can continuously monitor and process vast amounts of text from publications, patents, and clinical trial databases.43 They can extract key information, identify emerging trends, synthesize knowledge, generate novel hypotheses based on uncovered connections, and even suggest specific experiments to test these hypotheses.1 Causaly Discover, for instance, can provide automated alerts and summaries of new research in specified areas 8, while platforms like BenevolentAI use LLMs trained on scientific literature to predict targets.88 This capability acts as a powerful research assistant, augmenting human intellect and preventing valuable information from being overlooked.48

- Experimental Design & Automation (Towards Self-Driving Labs): AI agents are beginning to bridge the gap between in silico work and wet-lab experimentation. They can assist in designing experiments, optimizing protocols, and, increasingly, orchestrating automated laboratory workflows.10 This involves controlling robotic platforms for tasks like high-throughput screening, sample preparation, and data acquisition, forming closed-loop Design-Make-Test-Learn (DMTL) cycles.2 AI agents analyze experimental results in real-time, learn from them, and autonomously decide on the next steps, iterating towards a goal.53 Examples include Exscientia’s DMTL approach 2, Insilico Medicine’s fully robotic lab connected to its Pharma.AI platform 90, and Capgemini’s methodology using generative AI predictions to drastically reduce the number of experiments needed in protein engineering.92 This trend points towards the vision of “self-driving labs” or “AI scientists,” where autonomous systems conduct research with minimal human intervention, significantly accelerating discovery.10 The integration of multimodal data—combining genomics, proteomics, imaging, chemical structures, and clinical observations—is fundamental to the success of these advanced discovery applications, enabling a more holistic understanding of complex biology.2

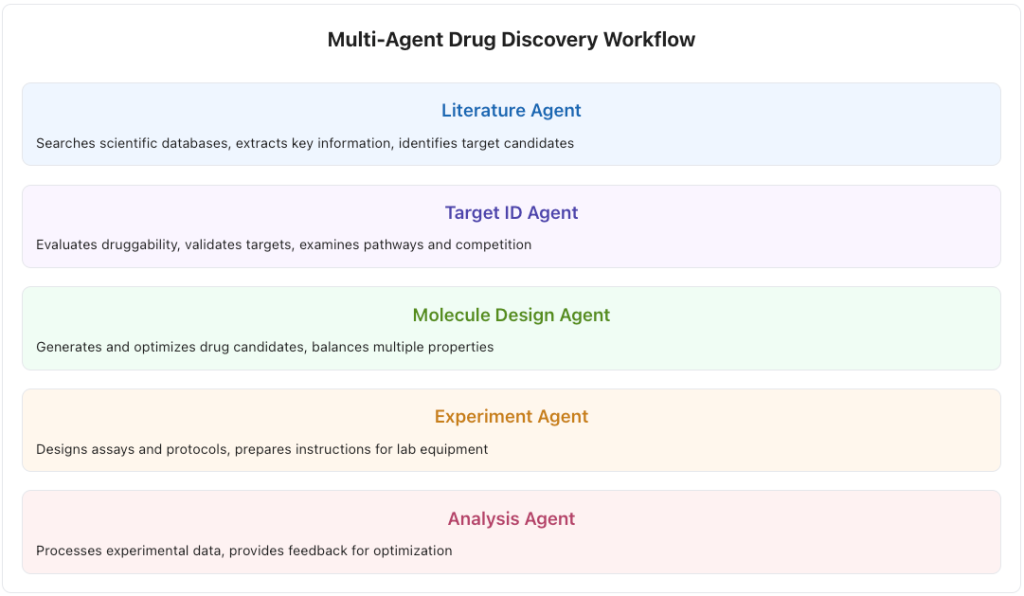

Figure 3: Process Flow Showing How Specialized Agents Collaborate Throughout the Drug Discovery Pipeline
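The closed-loop Design-Make-Test-Learn cycle described above can be illustrated with a toy example. The sketch below is an assumption-laden simplification: `simulated_assay` stands in for a wet-lab measurement (with an arbitrary optimum at 3.0), the single numeric parameter stands in for a molecule or protocol setting, and the "learning" step is a plain local search rather than any company's actual method.

```python
from typing import List, Tuple


def simulated_assay(x: float) -> float:
    # Hypothetical stand-in for a lab readout; the best response is at x = 3.0.
    return -(x - 3.0) ** 2


def design(best_x: float, step: float) -> List[float]:
    # Design: propose one candidate on each side of the current best.
    return [best_x - step, best_x + step]


def dmtl_loop(
    start: float, step: float = 1.0, cycles: int = 20, tol: float = 1e-3
) -> Tuple[float, float, List[Tuple[float, float]]]:
    best_x = start
    best_y = simulated_assay(best_x)
    history = [(best_x, best_y)]
    for _ in range(cycles):
        candidates = design(best_x, step)
        # Make + Test: "run" each candidate and record the readout.
        results = [(x, simulated_assay(x)) for x in candidates]
        history.extend(results)
        x_new, y_new = max(results, key=lambda r: r[1])
        # Learn: keep an improvement, otherwise search more finely.
        if y_new > best_y:
            best_x, best_y = x_new, y_new
        else:
            step /= 2
        if step < tol:
            break
    return best_x, best_y, history


best_x, best_y, history = dmtl_loop(start=0.0)
print(f"best setting: {best_x}, experiments run: {len(history)}")
```

The point of the sketch is the control flow: each cycle's results decide what is designed next, so the number of experiments depends on what the system learns rather than on a fixed screening plan.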

Optimizing Clinical Trials: Design, Recruitment, and Management

Clinical trials remain a critical, lengthy, and expensive bottleneck in drug development. AI agents are being applied to streamline various aspects of this phase.

- Trial Design: Agents can analyze historical trial data, real-world evidence, and biological knowledge to help optimize clinical trial protocols.1 This includes selecting appropriate endpoints, determining optimal dosing regimens, and predicting potential trial outcomes based on specific design choices.2 This data-driven approach aims to design trials that are more likely to succeed and yield meaningful results. Some platforms, like Unlearn.AI, use AI to create “digital twins” to model patient progression, potentially reducing the size of control arms needed.94

- Patient Recruitment & Stratification: Identifying and enrolling suitable participants is a major challenge, with poor recruitment being a common cause of trial delays and failures.22 AI agents can significantly accelerate this process by automatically scanning and analyzing large datasets like electronic health records (EHRs), patient registries, and genomic databases to identify eligible candidates based on complex inclusion/exclusion criteria.2 They can also help identify optimal geographic locations for trial sites based on patient demographics and historical enrollment data.4 This targeted approach not only speeds up recruitment 22 but also allows for better patient stratification, potentially identifying subgroups most likely to respond to the treatment and improving trial diversity.9 Tools like Salesforce’s Agentforce are being developed for tasks like reviewing data and suggesting trial sites.97

- Trial Monitoring & Management: AI agents can enhance the ongoing management and monitoring of clinical trials. They can automate the collection of data from diverse sources, including EHRs, wearable devices, and electronic patient-reported outcomes (ePROs).9 Agents can continuously monitor this incoming data in real-time to track patient safety, ensure compliance with protocols, and detect anomalies or potential issues early, alerting researchers immediately.1 This capability allows for quicker responses to safety signals and ensures trial integrity. Furthermore, some AI systems can facilitate adaptive trial designs by analyzing interim results and suggesting protocol adjustments on the fly, potentially making trials more efficient and ethical.1 AI agents can also automate administrative tasks like scheduling and follow-up reminders.9
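The eligibility screening step described under patient recruitment can be sketched as a rule check over structured records. Everything in this example is hypothetical: the `Patient` and `Criteria` fields, the thresholds, and the three synthetic patients are invented for illustration, and a real system would work from coded EHR data under the privacy and validation constraints discussed in this section.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Patient:
    patient_id: str
    age: int
    diagnoses: FrozenSet[str]
    egfr: float  # estimated kidney function, mL/min/1.73 m^2


@dataclass(frozen=True)
class Criteria:
    min_age: int
    max_age: int
    required_diagnosis: str
    excluded_diagnoses: FrozenSet[str]
    min_egfr: float


def screen(patient: Patient, criteria: Criteria) -> List[str]:
    """Return the reasons a patient is ineligible; an empty list means eligible."""
    reasons = []
    if not criteria.min_age <= patient.age <= criteria.max_age:
        reasons.append("age out of range")
    if criteria.required_diagnosis not in patient.diagnoses:
        reasons.append("missing required diagnosis")
    excluded = patient.diagnoses & criteria.excluded_diagnoses
    if excluded:
        reasons.append("excluded diagnosis: " + ", ".join(sorted(excluded)))
    if patient.egfr < criteria.min_egfr:
        reasons.append("eGFR below threshold")
    return reasons


# Synthetic protocol criteria and records (illustrative only).
criteria = Criteria(
    min_age=18,
    max_age=75,
    required_diagnosis="type 2 diabetes",
    excluded_diagnoses=frozenset({"heart failure"}),
    min_egfr=45.0,
)
patients = [
    Patient("P1", 54, frozenset({"type 2 diabetes"}), 80.0),
    Patient("P2", 81, frozenset({"type 2 diabetes", "heart failure"}), 60.0),
    Patient("P3", 40, frozenset({"hypertension"}), 30.0),
]

eligible = [p.patient_id for p in patients if not screen(p, criteria)]
print("eligible:", eligible)
```

Returning the reasons for exclusion, rather than a bare yes or no, gives reviewers something to audit, which matters for the transparency expectations noted in this section.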

The application of AI agents in clinical trials promises greater efficiency, reduced costs, and a more patient-centric approach.2 However, their use demands rigorous attention to data quality, privacy, security, and the mitigation of potential biases in algorithms or data.33 Regulatory acceptance hinges on transparency, validation, and adherence to standards like CDISC.33 Explainable AI (xAI) methods may become increasingly important to build trust and facilitate regulatory review.101

Enhancing Manufacturing, Quality Control, and Supply Chain Resilience

Beyond R&D, AI agents are being deployed to optimize the complex operational aspects of pharmaceutical manufacturing and supply chain management.

- Manufacturing Process Optimization: Agents can monitor manufacturing processes in real-time using data streams from sensors and production systems.3 They can identify operational trends, detect anomalies indicative of potential problems (e.g., equipment wear and tear), and optimize resource management by adjusting production schedules or raw material allocation.3 Predictive maintenance, where agents forecast equipment failure and schedule repairs proactively, helps minimize costly downtime.3 Companies like Pfizer have reported using AI to boost product yield and reduce cycle times in manufacturing.102

- Quality Control (QC): Traditional QC often relies on sporadic manual checks. AI agents enable continuous, automated quality monitoring.3 By analyzing sensor data or images from production lines, agents can instantly detect deviations from quality standards, such as incorrect dosage formulation or contamination, and recommend or initiate corrective actions.3 This ensures higher product quality and reduces the risk of defective products reaching the market.

- Supply Chain Management: Pharmaceutical supply chains are complex and vulnerable to disruption. I experienced this firsthand while working in R&D at the biotech darling Genzyme, just as it ran into multiple fill-finish problems, particularly at its Allston, MA, US plant, which resulted in drug shortages and regulatory actions in 2010. Fill-finish is the final stage of manufacturing, in which drugs are filled into vials and packaged. The problems led to the rejection of batches over quality issues and contamination, and the resulting disruption led to the eventual opportunistic takeover of the company by Sanofi-Aventis. These are the types of business-destroying problems that AI agents may help prevent in the future.

AI agents, often working within Multi-Agent Systems (MAS), are enhancing supply chain resilience and efficiency.3 They perform tasks like:

- Demand Forecasting: Analyzing historical data, market trends, and real-time signals to predict demand more accurately.11

- Inventory Management: Optimizing stock levels by comparing real-time inventory data with demand forecasts, identifying stock-out risks, initiating procurement, and reducing excess inventory and carrying costs.3

- Logistics Coordination: Orchestrating logistics, rerouting shipments based on real-time constraints, and ensuring efficient drug distribution.3

- M&A Due Diligence: Automating data gathering and analysis across clinical, regulatory, financial, and operational domains to support M&A decisions.4

Companies like ParkourSC are demonstrating across a range of industries how AI agents provide dynamic decision intelligence for real-time optimization and disruption prevention in life science supply chains.105 The integration with Internet of Things (IoT) sensors and the ability to process real-time data streams are crucial enablers for these applications.3 This shift towards active control and optimization requires platforms capable of real-time data ingestion and action triggering.
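The inventory management task listed above (comparing on-hand stock with a demand forecast, spotting stock-out risk, and triggering procurement) reduces to simple arithmetic once the forecast exists. The sketch below assumes a daily forecast is already available. The `StockItem` fields, the SKU, and the reorder rule (cover lead-time demand plus a safety stock) are illustrative assumptions, not a description of any specific product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StockItem:
    sku: str
    on_hand: int          # units currently in inventory
    lead_time_days: int   # days between ordering and receiving stock
    safety_stock: int     # buffer to keep on hand at all times


def days_of_cover(on_hand: int, daily_forecast: List[int]) -> Optional[int]:
    """Full days of forecast demand the stock can meet; None if it lasts the whole horizon."""
    remaining = on_hand
    for day, demand in enumerate(daily_forecast):
        remaining -= demand
        if remaining < 0:
            return day
    return None


def reorder_quantity(item: StockItem, daily_forecast: List[int]) -> int:
    """Units to order so stock covers lead-time demand plus the safety stock."""
    lead_demand = sum(daily_forecast[: item.lead_time_days])
    shortfall = lead_demand + item.safety_stock - item.on_hand
    return max(0, shortfall)


# Synthetic example: 500 vials on hand, forecast demand of 120 per day for a week.
item = StockItem(sku="VIAL-10ML", on_hand=500, lead_time_days=5, safety_stock=100)
forecast = [120] * 7

cover = days_of_cover(item.on_hand, forecast)
order = reorder_quantity(item, forecast)
if cover is not None and cover < item.lead_time_days:
    print(f"{item.sku}: stock-out risk after {cover} days, order {order} units")
```

In this example the stock covers four full days against a five-day lead time, so the check flags a stock-out risk and proposes an order of 200 units. An agent would run a check like this continuously as inventory and forecast data change.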

Streamlining Regulatory Processes and Compliance

The highly regulated nature of the biopharma industry presents significant opportunities for AI agents to automate and assist with complex compliance and documentation tasks.

- Regulatory Document Generation: Preparing extensive regulatory submissions like Investigational New Drug (IND) applications, New Drug Applications (NDAs), or Clinical Study Reports (CSRs) is a major bottleneck.4 AI agents can automate large parts of this process.2 This involves agents autonomously retrieving necessary data (clinical, non-clinical, CMC) from disparate systems, drafting reports using predefined regulatory templates, validating formatting and content against agency guidelines (e.g., FDA, EMA), checking for internal consistency, and flagging sections requiring human review.4 Bayer, for example, reported leveraging generative AI to automate up to 80% of regulatory dossier completion.2 This automation promises significant time savings and reduction in manual errors.

- Compliance Monitoring: AI agents can continuously monitor data from clinical trials or manufacturing processes to ensure adherence to regulatory standards (e.g., GxP, trial protocols).4 They can automatically flag deviations, potential compliance issues, or safety concerns, enabling timely intervention.9 During M&A due diligence, agents can be tasked with specifically extracting regulatory compliance issues from target company data.4

- Regulatory Intelligence: While less explicitly detailed in the source material, the complexity of navigating evolving global regulations 32 suggests a potential future role for AI agents in tracking changes, interpreting guidelines, and assisting companies (especially smaller ones with limited resources) in maintaining global compliance.

Regulatory affairs represents a high-potential area for agentic AI due to the structured, data-heavy, and rule-intensive nature of the work.4 However, the critical nature of these tasks means that accuracy, reliability, traceability, and validation are non-negotiable for regulatory acceptance.4 Platforms targeting this space must prioritize explainability (detailing agent reasoning), robust audit trails, rigorous validation processes, and potentially integration with Quality Management Systems (QMS).107 Adherence to emerging FDA and EMA guidance on AI use in drug development is also crucial.99
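One way to make the audit trail requirement concrete is an append-only log in which every agent action records its rationale and is linked to the previous entry by a hash, so later edits are detectable. This is a sketch of one possible design, not a validated or regulator-endorsed approach: hash chaining is an assumption introduced here, the field names are hypothetical, and timestamps are omitted only to keep the example deterministic.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body: dict) -> str:
    # Stable serialization so the same content always hashes the same way.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: List[dict], agent: str, action: str, rationale: str) -> dict:
    """Append one agent action, chained to the previous entry's hash."""
    body = {
        "seq": len(log),
        "agent": agent,
        "action": action,
        "rationale": rationale,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry


def verify(log: List[dict]) -> bool:
    """Check sequence numbers, chain links, and content hashes."""
    prev = GENESIS
    for i, entry in enumerate(log):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["seq"] != i or entry["prev_hash"] != prev:
            return False
        if _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


audit_log: List[dict] = []
append_entry(audit_log, "drafting-agent", "draft_csr_section", "template 4.2 selected")
append_entry(audit_log, "review-agent", "flag_for_human_review", "dose table inconsistent")
intact = verify(audit_log)

# Simulate tampering with a recorded rationale.
audit_log[0]["rationale"] = "edited after the fact"
tampered_detected = not verify(audit_log)
print("intact before edit:", intact, "| tampering detected:", tampered_detected)
```

Recording the rationale alongside each action also supports the explainability requirement, since a reviewer can trace why the agent took each step.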

Powering Genomic Data Analysis and Personalized Medicine

The explosion of genomic and other ‘omics’ data presents both immense opportunities and significant analytical challenges. AI agents are becoming indispensable tools for translating this data into actionable insights for personalized medicine.

- Genomic Data Processing & Analysis: The sheer volume and complexity of genomic data make manual analysis infeasible.1 AI agents, powered by machine learning algorithms, can rapidly and accurately process these large datasets.43 They can identify complex patterns, correlations, and genetic variants associated with specific diseases, predict disease susceptibility, and forecast treatment responses based on genomic profiles.1 Agents can also automate many of the repetitive tasks involved in genomic analysis, freeing up bioinformaticians and researchers.43

- Personalized Medicine: AI agents are central to realizing the vision of personalized medicine.1 By integrating and analyzing individual patient data from diverse sources – including genomic sequences, clinical records (EHRs), medical imaging, lifestyle information, and even real-time data from wearables – agents can help predict individual responses to therapies, identify potential adverse drug reactions, and recommend tailored treatment strategies.1 This allows for the development of medications targeted to specific genetic profiles, increasing efficacy and reducing side effects.1 Agentic AI integrated with wearables could even provide personalized health coaching and proactive monitoring.11

- Rare Disease Research: Developing treatments for rare diseases is often hampered by limited patient populations and sparse data.1 AI agents can be particularly valuable here by extracting maximum insight from available genomic and clinical data.1 They can help identify potential therapeutic targets or repurpose existing drugs for these conditions. Techniques like using generative AI to create synthetic training data, as demonstrated by Bayer with histology images, can help overcome data limitations in diagnostics for rare diseases.2 Companies like Healx specifically focus their AI platforms on accelerating rare disease drug discovery.23

The power of AI agents in genomics and personalized medicine stems from their ability to handle massive, multimodal datasets and uncover complex biological relationships.1 This necessitates platforms with strong capabilities in data integration, harmonization, and analysis, incorporating sophisticated bioinformatics tools and potentially specialized AI models trained on biological data (e.g., protein structure prediction models like AlphaFold 22). Given the sensitivity of patient data, robust data privacy, security measures, and ethical considerations are paramount.11

The Competitive Landscape: AI Agent Platforms and Providers (April 2025)

The market for AI agents and enabling platforms in biotech and pharma is dynamic and rapidly evolving, characterized by significant investment, diverse players, and ongoing technological advancements. As of April 2025, the landscape includes AI-native biotechs driving end-to-end discovery, specialized platform providers, large technology companies offering foundational tools and infrastructure, and traditional players integrating AI capabilities.

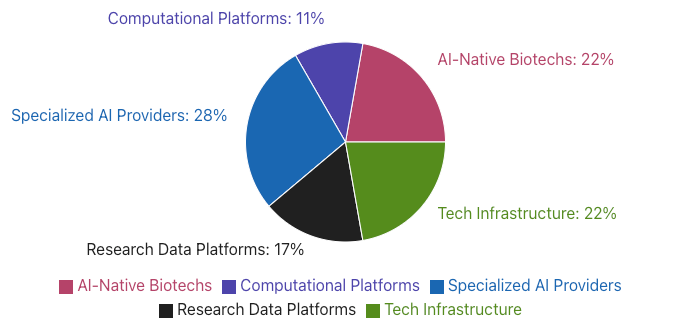

Figure 4: Distribution of AI Agent Companies Across Different Categories in the Biotech Ecosystem

Dominant Players: Profiles and Offerings

Several companies have established themselves as significant forces, either by developing comprehensive AI-driven R&D platforms or by providing widely adopted computational tools increasingly incorporating AI.

- Recursion Pharmaceuticals (including Exscientia): Positioned as a leading “TechBio” company, Recursion aims to industrialize drug discovery through its Recursion Operating System (OS).83 The OS integrates large-scale wet-lab automation (generating phenomic, transcriptomic, proteomic data) with massive proprietary datasets (>60PB) 83, advanced AI/ML models (including foundation models like Phenom-2 and LLM agents like LOWE) 69, and supercomputing infrastructure (BioHive-2).69 Its focus is on hypothesis-agnostic mapping of biological relationships for target identification, molecule design, and preclinical development.69 Recursion has a growing internal pipeline and major partnerships with Roche, Bayer, Merck KGaA, Sanofi, and technology providers like NVIDIA and Google Cloud.14 The recent merger with Exscientia (completed Nov 2024 122) added significant capabilities in precision chemistry design, automated synthesis, and patient-centric approaches, strengthening the end-to-end platform.122

- Relevance for Small Biotechs: While primarily focused on its internal pipeline and large pharma partnerships, Recursion has shown some initiatives potentially relevant to smaller players. It anchored a pre-seed venture fund via its Altitude Lab accelerator specifically for SBIR-reviewed startups impacted by funding shifts.120 Its collaboration with Enamine to release AI-curated screening libraries makes specific outputs of its platform available commercially.131 However, direct access to the full Recursion OS for small external labs appears limited currently.

- Insilico Medicine: A prominent AI-native company with an end-to-end generative AI platform, Pharma.AI.25 This platform comprises integrated modules: PandaOmics™ for target discovery (analyzing omics, publications, trials) 136, Chemistry42™ for de novo small molecule generation and optimization 80, and inClinico™ for predicting clinical trial outcomes.77 Insilico emphasizes its use of generative AI (including GANs, reinforcement learning, LLMs like Nach01, and multi-agent assistant DORA) 90 and has integrated its AI with a fully robotic lab (Life Star1) for automated experimentation.82 The company has rapidly advanced its internal pipeline, notably bringing an AI-discovered and AI-designed drug for Idiopathic Pulmonary Fibrosis (IPF) into Phase II trials 135, and has secured major partnerships with Sanofi, Fosun Pharma, EQRx, and others.22

- Relevance for Small Biotechs: Insilico offers software licenses for its platform components (PandaOmics, Chemistry42) alongside collaborations.91 User testimonials suggest PandaOmics is usable and effective for target ID.136 The platform’s potential for significant cost and time reduction (e.g., $2M preclinical candidate cost cited historically 135) is attractive. However, substantial funding rounds ($110M Series E in March 2025 90) suggest an enterprise focus, and specific pricing for small startups is not readily available. Demo videos show a potentially user-friendly interface for specific tasks like peptide design.143

- Schrödinger: A long-standing leader in computational chemistry and molecular modeling, Schrödinger provides a comprehensive, physics-based software platform used extensively across academia and industry, including 19 of the top 20 pharma companies.12 Its tools (Maestro, Glide, FEP+, LiveDesign, etc.) support small molecule and biologics discovery, materials science, and drug formulation.145 While historically emphasizing physics-based simulation, Schrödinger is increasingly integrating AI and machine learning to enhance its platform, particularly in areas like predictive toxicology (funded partly by the Gates Foundation).85 The company also offers collaborative drug discovery services and develops its own internal pipeline.24

- Relevance for Small Biotechs: Schrödinger appears to be one of the more accessible platforms for smaller organizations. They explicitly offer “Customizable packages for biotech startups” 147 and tiered pricing, with one source suggesting a $1,500/month starting point for small startups.150 They also provide academic discounts, free trials, extensive training resources (online courses, tutorials, workshops), and dedicated scientific support.145 This makes it a strong contender for small teams needing powerful computational tools, although the platform’s complexity might still present a learning curve.

- BenevolentAI: Focuses on leveraging AI, particularly its biomedical Knowledge Graph and NLP capabilities, to mine vast amounts of scientific data (literature, patents, clinical data) and identify novel drug targets.22 The Benevolent Platform™ aims to accelerate target identification and validation, supporting scientists and executives in making higher-confidence R&D decisions.88 The company has partnerships with large pharma (historically AstraZeneca, Merck) and an internal pipeline.22 They possess wet-lab facilities for validation.88

- Relevance for Small Biotechs: BenevolentAI offers API access to integrate its technology into partners’ platforms 156, which could be relevant for tech-savvy startups. However, their primary business model seems geared towards large collaborations.88 Pricing information for direct platform access or API use by small labs is generally listed as “Contact for Pricing” 161, suggesting bespoke enterprise deals rather than standardized startup tiers. Historical academic collaborations exist 154, but broad academic/startup programs are not highlighted.

- Atomwise: Specializes in structure-based drug discovery using its AtomNet™ platform, which employs deep learning (specifically convolutional neural networks) to predict drug-target interactions and screen vast virtual libraries (reportedly over 3 trillion compounds).23 The company aims to make drug discovery more rational and efficient than serendipitous methods.28 Atomwise collaborates extensively with both academic institutions and industry partners (e.g., Sanofi).25

- Relevance for Small Biotechs: Atomwise offers services, licensing, and collaborative research projects.167 Their AIMS (Artificial Intelligence Molecular Screen) awards program historically provided academic and non-profit researchers with virtual screening and compounds at no cost 168, indicating a potential pathway for non-commercial access. However, commercial pricing for small biotech startups is not specified. A usability study rated Atomwise highly alongside Insilico’s Pharma.AI.169

- Causaly: Provides an AI platform focused on extracting causal relationships and insights from biomedical literature and data.8 Key components include a highly precise Knowledge Graph (Bio Graph) distinguishing causality from correlation, an Enterprise Data Fabric for integrating internal/external data, and a Generative AI Copilot for answering complex research questions with verifiable sources.171 Their ‘Discover’ offering incorporates agentic AI for autonomous research, report generation, and monitoring new publications.8 They target research scientists, AI/Tech teams, and data teams in pharma/biotech.171

- Relevance for Small Biotechs: Causaly’s focus on literature analysis and hypothesis generation addresses a key pain point for researchers. The platform offers API access (Bio Graph API) 171, potentially useful for integration. However, like BenevolentAI, pricing details for small labs are not readily available (often “Contact for Pricing” or based on funding rounds 174), suggesting an enterprise focus. Its SaaS model 176 might offer some flexibility compared to large upfront licenses.

- Benchling: A widely adopted R&D Cloud platform that unifies ELN, LIMS, registry, inventory, workflow management, and insights capabilities.177 While not exclusively an AI drug discovery platform, it provides the critical data foundation and workflow orchestration capabilities essential for effective AI integration in labs.181 It emphasizes codeless configuration, extensive integration capabilities (instruments, software via APIs, Developer Platform), and collaboration features.178 Benchling is used by a wide range of organizations, from startups to large pharma and academia.180 They are incorporating AI tools to enhance productivity within the platform.182

- Relevance for Small Biotechs: Benchling is explicitly used by many startups 182 and addresses key pain points around data silos and ELN/LIMS integration.39 Its focus on usability and codeless configuration 184 is beneficial for teams without extensive IT support. However, users have noted that costs can increase rapidly as teams scale, and frustrations exist with workflow adaptability and data migration.39 Its integration capabilities are crucial for building a connected lab ecosystem suitable for AI agents.

- Large Tech/Cloud Providers (Google, NVIDIA, AWS, Microsoft): These companies are dominant enablers rather than direct platform providers in the same vein as the biotechs. Google offers Vertex AI, specialized life science suites (Target/Lead ID, Multiomics), foundational models (Gemini, AlphaFold via DeepMind/Isomorphic), and infrastructure.24 NVIDIA provides the essential hardware (GPUs), the BioNeMo framework and NIM microservices for biomolecular AI, and DGX Cloud.14 AWS and Microsoft Azure offer scalable cloud compute, storage, and AI/ML services (SageMaker, Bedrock, Azure ML) along with marketplaces featuring partner solutions.102

- Relevance for Small Biotechs: These providers offer essential building blocks. Cloud services provide scalable infrastructure without massive capital expenditure.190 Marketplaces offer access to specialized tools.192 Open-source contributions (like BioNeMo 26) can lower barriers. However, effectively utilizing these requires significant technical expertise.36

Table 1: Comparison of Selected AI Platforms for Biotech/Pharma (April 2025)

| Company/Platform | Key AI Agent Capabilities | Technology Focus | Primary Target Market | Integration Focus | Known Access/Pricing Models | Key Partnerships/Customers |
| --- | --- | --- | --- | --- | --- | --- |
| Recursion (incl. Exscientia) | Target ID, Molecule Gen/Opt, Workflow Automation (LOWE agent), Automated Synthesis | Agentic AI, ML/DL, Robotics, Large Datasets, Precision Chemistry | Large Pharma (Partnerships), Internal Pipeline | Wet Lab Automation, Cloud, Data | Partnership-driven; Accelerator/Fund for startups; Library collaboration (Enamine) | Roche, Bayer, Merck KGaA, Sanofi, NVIDIA, Google Cloud, BMS (via Exscientia) 69 |
| Insilico Medicine | Target ID (PandaOmics), Molecule Gen (Chemistry42), Clinical Trial Prediction (inClinico) | Generative AI (GANs, LLMs), Robotics, End-to-End Platform | Large Pharma (Partnerships), Internal Pipeline | Robotic Lab, Data | Partnership-driven; Software Licensing (PandaOmics, Chemistry42) 91 | Sanofi, Fosun, EQRx, Astellas, Pfizer (collaborations/licenses) 22 |
| Schrödinger | Molecule Design/Opt, Predictive Toxicology (AI-enhanced), Simulation | Physics-based Simulation, Increasingly AI/ML integrated | Large Pharma, Biotech (all sizes), Academia | Software Suite, Cloud | Tiered Subscriptions, Startup Packages ($1500/mo?), Academic Discounts, Free Trials, Training 147 | 19/20 Top Pharma, Bayer, Lilly, Novartis, BMS, Takeda, Sanofi (collaborations/licenses) 24 |
| BenevolentAI | Target ID, Knowledge Discovery, Hypothesis Generation | Knowledge Graph, NLP, ML | Large Pharma (Partnerships), Internal Pipeline | API, Data | Partnership-driven; API access offered; Pricing often “Contact Us” 156 | AstraZeneca, Merck (collaborations) 88 |
| Causaly | Autonomous Research (Discover agent), Knowledge Extraction, Question Answering (Copilot) | Knowledge Graph, Generative AI (Copilot), Agentic AI (Discover), Data Fabric | Pharma/Biotech R&D, AI/Tech, Data Teams | API (Bio Graph), Internal Data Sources | SaaS Model; Pricing often “Contact Us”; VC Funded 174 | Novo Nordisk 79 |
| Atomwise | Hit ID (Virtual Screening), Drug-Target Interaction Prediction (AtomNet) | Structure-based Deep Learning | Pharma, Biotech, Academia | Chemical Libraries | Services, Licensing, Collaborations; AIMS program (historical free access for non-profits) 167 | Sanofi, hundreds of academic institutions 25 |
| Benchling | Workflow Automation, Data Management (AI-enhanced productivity tools) | R&D Cloud Platform (ELN/LIMS), Data Modeling, Integration | Biotech (all sizes), Large Pharma, Academia | ELN/LIMS, Instruments, Software (API) | Subscription-based; Costs can scale with team size 39 | >1300 companies including startups & >50% Top 50 Pharma, Absci 182 |
| Google Cloud / Isomorphic Labs | Foundational Models (AlphaFold, Gemini), Target/Lead ID Suite, Cloud AI Services | Generative AI, LLMs, Cloud Infrastructure, Specialized Life Science Solutions | Broad (Cloud); Large Pharma (Isomorphic Partnerships) | Vertex AI, GKE, Cloud Infrastructure | Cloud Services (PAYG/Commitments); Marketplace; Isomorphic (Partnership) 188 | Lilly, Novartis (Isomorphic); Servier, Recursion, Ginkgo (Google Cloud) 126 |
| NVIDIA | Foundational Models (via BioNeMo), GPU Acceleration, Cloud Platform | Accelerated Computing, AI Frameworks (BioNeMo), NIM Microservices | AI Developers, Researchers, Pharma/Biotech | DGX Cloud, Partner Platforms | Hardware sales; Software/Framework (Open Source BioNeMo) 26 | Recursion, Genentech, numerous techbios/pharma using BioNeMo 26 |
| AWS / Microsoft Azure | Cloud Compute, Storage, AI/ML Services (SageMaker, Bedrock, Azure ML), Marketplaces | Cloud Infrastructure, AI Platform Services | Broad (Cloud Services) | Cloud Infrastructure, Partner Tools | Cloud Services (PAYG/Commitments); Marketplace 192 | Pfizer (AWS), Manas AI (Microsoft) 102 |

Note: Capabilities and pricing models are based on available information as of April 2025 and may evolve. “Agentic” capabilities refer to autonomous goal-directed task execution.

Emerging Challengers and Niche Innovators

Beyond the established players, a vibrant ecosystem of startups and specialized companies is contributing to the AI agent landscape in biotech and pharma. This segment is characterized by significant VC funding, diverse technological approaches, and often a focus on specific niches or stages of the value chain.197

- Well-Funded Recent Startups: The period from 2024 into early 2025 saw substantial investment rounds, although overall funding dipped slightly from previous peaks.197 Notable examples include Google’s Isomorphic Labs ($600M) 141, Eikon Therapeutics ($351M) 197, and numerous others securing tens to hundreds of millions (e.g., AIRNA, Character Biosciences, Hippocratic AI, Abridge).197 While impressive, many of these heavily funded entities are primarily focused on developing their own drug pipelines using AI, rather than offering their platforms externally, especially to smaller biotechs.23

- Specialized Platform/Technology Providers: Several emerging companies offer unique AI technologies or platforms targeting specific needs:

- Generative Biology/Protein Design: Generate Biomedicines 28, Cradle Bio 28, DenovAI Biotech 27, Latent Labs.28

- Rare Diseases/Repurposing: Healx 23, Ignota Labs 206, Every Cure.64

- Specific Modalities/Areas: Verge Genomics (neurology) 16, Enveda Biosciences (natural products) 23, Xbiome (microbiome) 95, Nanograb (nanoparticles) 206, Anima Biotech (mRNA modulators).28

- Computational/Platform Focus: Iktos (design & robotics) 28, Standigm (target ID, lead design, repurposing) 16, Genesis Therapeutics (physics+AI) 95, Model Medicines (antivirals, generative AI) 16, DeepMirror (generative AI hit-to-lead) 207, Cyclica (polypharmacology).25

- Bioinformatics/Data Platforms: Ardigen 23, Genedata 12, PathAI (pathology).23

- Contract Research Organizations (CROs) with AI Offerings: Recognizing the demand, traditional CROs and specialized service providers are increasingly incorporating AI into their offerings. Examples include Charles River Labs 12, Evotec 12, Aurigene Services (with its Aurigene.AI platform integrating predictive/generative AI and CADD) 29, Immunocure (AxDrug generative AI platform) 30, Fujifilm (drug2drugs AI service) 31, Proscia (digital pathology AI for CROs) 209, and Viva Biotech (AIDD/CADD services).210 These CROs can provide smaller biotechs access to AI capabilities on a project basis without needing to license or build platforms themselves.

This diverse challenger landscape signifies rapid innovation across multiple fronts. However, for a small biotech seeking an AI agent platform, the challenge lies in identifying providers whose offerings align with their specific needs, budget, and technical capabilities, distinguishing between true platform providers and drug developers using AI internally.

The Role of Cloud Platforms (AWS, Google Cloud) and Open Source Toolkits (DeepChem, RDKit)

Cloud computing platforms and the open-source software movement have significantly democratized access to powerful AI tools and infrastructure, a shift that matters most for resource-constrained organizations.

- Cloud Platforms (AWS, Google Cloud, Azure): Major cloud providers offer the scalable computational power (CPUs, GPUs, TPUs) and storage necessary for demanding AI workloads in biotech, eliminating the need for large upfront investments in on-premises hardware.190 Beyond basic infrastructure, they provide specialized AI/ML services like Amazon SageMaker, Google Vertex AI, Azure Machine Learning, and access to foundation models like Google’s Gemini or via Amazon Bedrock.14 They also host healthcare-specific solutions (e.g., Google’s Healthcare Data Engine, Target/Lead ID Suite) 188 and marketplaces where third-party vendors offer specialized biotech AI tools and services.192 This allows smaller companies to access cutting-edge capabilities on a pay-as-you-go basis.188

- Open Source Toolkits: The open-source community provides powerful, freely available software libraries that form the backbone of many computational chemistry and AI drug discovery efforts.

- RDKit: A fundamental cheminformatics toolkit written in C++ with Python wrappers, widely used for reading, writing, manipulating, and analyzing chemical structures (SMILES, MOL files), calculating molecular descriptors and fingerprints, and generating 2D/3D coordinates.166 It’s a prerequisite or integrated component in many higher-level tools.215

- DeepChem: A Python library specifically aimed at democratizing deep learning for drug discovery, materials science, and biology.215 It integrates with major ML frameworks (TensorFlow, PyTorch, JAX) and cheminformatics tools like RDKit.215 DeepChem provides implementations of various featurization methods (e.g., ECFP, Graph Convolutions), ML models, data handling utilities (MoleculeNet datasets, splitters), and tutorials for tasks like solubility prediction, protein-ligand interaction modeling, and generative modeling.215

- Other Initiatives: Projects like NVIDIA’s open-source BioNeMo framework 26, collaborative efforts like the AI for Drug Discovery Open Innovation Forum 226, and industry consortia like Pharmaverse 227 further promote open standards and tool sharing. Many research groups also release their code on platforms like GitHub.216

While these resources drastically lower the barrier to entry for accessing compute power and AI algorithms, effectively leveraging them still demands considerable expertise.231 Small biotechs often lack the necessary data scientists, bioinformaticians, cheminformaticians, and cloud engineers to build, validate, and maintain complex workflows using these components.36 Therefore, while cloud and open-source tools are essential enablers, there remains a need for platforms that can abstract away some of this complexity for non-expert users in smaller labs.
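To make the RDKit capabilities described above more concrete, the following is a minimal sketch of the kind of script a small team might write: it parses SMILES strings, computes a few descriptors, and compares Morgan fingerprints. It assumes RDKit is installed in the Python environment; the three example molecules, the choice of descriptors, and the helper names `describe` and `similarity` are illustrative assumptions, not part of any platform discussed in this report.

```python
from rdkit import Chem, DataStructs
from rdkit.Chem import Descriptors, rdFingerprintGenerator

# Illustrative molecules only (assumption: any valid SMILES would work here).
SMILES = {
    "aspirin": "CC(=O)OC1=CC=CC=C1C(=O)O",
    "salicylic_acid": "OC(=O)C1=CC=CC=C1O",
    "caffeine": "CN1C=NC2=C1C(=O)N(C)C(=O)N2C",
}

# Morgan (ECFP-like) fingerprint generator: radius 2, 2048-bit vectors.
_MORGAN = rdFingerprintGenerator.GetMorganGenerator(radius=2, fpSize=2048)


def _parse(smiles: str) -> Chem.Mol:
    """Parse a SMILES string, raising instead of silently returning None."""
    mol = Chem.MolFromSmiles(smiles)
    if mol is None:
        raise ValueError(f"could not parse SMILES: {smiles!r}")
    return mol


def describe(smiles: str) -> dict:
    """Return a few basic descriptors for one molecule."""
    mol = _parse(smiles)
    return {
        "canonical_smiles": Chem.MolToSmiles(mol),
        "mol_wt": Descriptors.MolWt(mol),
        "logp": Descriptors.MolLogP(mol),
        "heavy_atoms": mol.GetNumHeavyAtoms(),
    }


def similarity(smiles_a: str, smiles_b: str) -> float:
    """Tanimoto similarity between the Morgan fingerprints of two molecules."""
    fp_a = _MORGAN.GetFingerprint(_parse(smiles_a))
    fp_b = _MORGAN.GetFingerprint(_parse(smiles_b))
    return DataStructs.TanimotoSimilarity(fp_a, fp_b)


if __name__ == "__main__":
    for name, smi in SMILES.items():
        print(name, describe(smi))
    print("aspirin vs salicylic acid:",
          similarity(SMILES["aspirin"], SMILES["salicylic_acid"]))
    print("aspirin vs caffeine:",
          similarity(SMILES["aspirin"], SMILES["caffeine"]))
```

Even a short script like this presumes familiarity with Python, SMILES notation, and fingerprint parameters, which illustrates the expertise gap noted above.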

Market Size, Growth Trends, and Investment Landscape

The market for AI in drug discovery and biotechnology is characterized by rapid growth, significant investment, and evolving regional dynamics.

- Market Size and Growth: Numerous market research reports project substantial growth, although specific figures vary. Common estimates place the 2024/2025 market size in the range of approximately $1.5 billion to $7 billion USD, with projected growth reaching anywhere from $7 billion to over $16 billion (and sometimes much higher for broader AI in pharma/biotech segments) by the early 2030s.12 Compound Annual Growth Rates (CAGRs) are consistently high, often ranging from ~10% to over 30%, indicating strong market momentum driven by the perceived value of AI in accelerating R&D, reducing costs, and enabling personalized medicine.12 Generative AI is seen as a particularly potent driver, with potential economic value estimated in the tens to hundreds of billions annually for the pharma industry.22

- Regional Trends: North America, particularly the United States, currently dominates the market, accounting for the largest revenue share (estimates range from 40%+ to over 56%).12 This dominance is fueled by a strong presence of major pharmaceutical and biotech companies, leading AI technology providers, significant R&D spending, substantial government and VC funding for AI research, and a supportive regulatory environment.12 Europe is the second-largest market.12 However, the Asia-Pacific region, led by countries like China, Japan, India, and South Korea, is consistently identified as the fastest-growing market, driven by expanding biotech hubs, increased government support, rising healthcare investments, and growing AI adoption.12

- Investment Landscape: The AI sector, in general, has attracted massive venture capital investment, becoming a leading sector globally in 2024.200 Healthcare and biotech AI specifically saw significant funding growth, with biotech AI attracting billions in 2024.200 This trend continued into early 2025, with large “megarounds” becoming more common.197 High-profile examples include Isomorphic Labs’ $600M round 141, Anthropic’s $3.5B round (relevant as a foundational model provider) 237, and Insilico Medicine’s $110M Series E.90 Major investors include large VC firms (e.g., Thrive Capital, a16z), sovereign wealth funds (GIC), and strategic corporate investors (e.g., NVIDIA, pharma venture arms).126 Mergers and acquisitions are also shaping the landscape, exemplified by the Recursion-Exscientia combination.28 This intense investment activity underscores the high expectations for AI’s impact but also suggests potential market volatility and future consolidation.
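Because the market-size estimates above span wide ranges, it can help to check which growth rates the range endpoints actually imply. The sketch below computes the compound annual growth rate, CAGR = (end / start)^(1 / years) − 1, for combinations of the quoted endpoints. The 8-year horizon is an assumption made for illustration (the reports cited only say "early 2030s"), and the function names are hypothetical.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and horizon must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding `rate` annually for `years`."""
    return start_value * (1.0 + rate) ** years


# Range endpoints quoted in the text, in USD billions.
# ASSUMPTION: an 8-year horizon stands in for "2024/2025 to the early 2030s".
HORIZON_YEARS = 8
SCENARIOS = {
    "low start, low end": (1.5, 7.0),
    "low start, high end": (1.5, 16.0),
    "high start, high end": (7.0, 16.0),
}

if __name__ == "__main__":
    for label, (start, end) in SCENARIOS.items():
        rate = cagr(start, end, HORIZON_YEARS)
        print(f"{label}: ${start}B -> ${end}B over {HORIZON_YEARS} years "
              f"implies {rate:.1%} per year")
```

Pairing different endpoints shows how strongly the implied growth rate depends on which baseline a given report uses, which is one reason published CAGRs for this market vary so widely.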

Challenges and Needs of Small Biotech Research Teams

While AI agents offer transformative potential, their practical adoption and effective utilization by small biotech research teams are hindered by a unique set of interconnected challenges related to workflow complexity, resource limitations, and data hurdles. Understanding these specific pain points is crucial for identifying opportunities for tailored platform solutions.

Navigating Complex R&D Workflows with Limited Resources

The drug discovery and development process is inherently complex, iterative, and lengthy, often described by the Design-Make-Test-Analyze (DMTA) cycle.240 This process can take over a decade and involves numerous stages, from initial target identification and validation, through lead optimization, preclinical testing, multi-phase clinical trials, and regulatory submission.32 Small biotech companies, often founded around specific scientific innovations or platform technologies, must navigate this entire complex pathway.241 It is critical to “close the loop” in this cycle: collect all the data from each iteration and feed it back to inform the next one.

However, small biotechs typically operate with lean structures and small teams.71 This means individual scientists often wear multiple hats, taking on responsibilities beyond pure bench science, such as literature review, data management, and project coordination.34 Unlike large pharma, they rarely have dedicated research librarians, information managers, or large informatics teams.34 This limited personnel bandwidth makes managing the multifaceted R&D workflow extremely challenging.

Furthermore, small biotechs are under intense pressure to achieve critical milestones rapidly to secure subsequent funding tranches.33 Delays caused by workflow inefficiencies can be particularly detrimental. Common inefficiencies in resource-constrained environments include:

- Documentation Bottlenecks: Prioritizing experiments over documentation leads to backlogs, potentially resulting in incomplete or low-quality data records as details are forgotten.240 Time spent on manual literature searching and management also detracts from core research.34

- Informal/Fragmented Data Practices: Using transient storage (network folders, USB drives) or informal recording methods (Post-It notes) risks data loss, lacks context, and hinders accessibility.240

- Coordination Challenges: Reliance on external partners like CROs or consultants, while necessary to fill expertise gaps, introduces complexities in managing relationships, ensuring data consistency, and retaining knowledge within the startup.96

- Strategic Focus: Platform technology companies face the challenge of identifying and validating the core applications to focus on with limited resources.241 Poor project selection or continuing failing projects due to sunk cost fallacy can waste precious resources.56

These workflow and resource challenges mean that small biotechs have an acute need for tools that streamline processes, automate routine tasks, facilitate seamless collaboration (both internal and external), and minimize the administrative burden on scientists, allowing them to focus on innovation under tight timelines and budgets.

The Resource Gap: Budget, Compute Power, and Specialized Talent

Beyond workflow complexities, small biotechs face significant gaps in fundamental resources required to leverage advanced AI technologies effectively.

- Budget Constraints: Drug development is inherently expensive, with average costs per approved drug often cited in the billions of dollars when accounting for failures.32 Startups rely heavily on venture capital or partnerships, and funding can be precarious, especially in fluctuating economic climates.96 This makes large upfront investments in sophisticated AI platforms or infrastructure difficult. The high cost of implementing and maintaining AI systems (including software licenses, hardware, and integration) is a primary barrier to adoption for SMEs.22 Even essential informatics tools like ELNs and LIMS can have prohibitive or opaque pricing structures with hidden costs for customization or integration, straining limited budgets.39

- Compute Power: Modern AI, particularly deep learning and large-scale simulations used in drug discovery, requires significant computational resources, often necessitating High-Performance Computing (HPC) clusters.119 Most small companies lack the capital and expertise to build and maintain such infrastructure in-house.191 Cloud computing offers a viable alternative, providing scalable, pay-as-you-go access to powerful computing resources.190 However, effectively managing cloud costs and configuring complex cloud environments still requires technical know-how.119 Emerging technologies like quantum computing promise further breakthroughs but are currently far from accessible or practical for most startups.54

- Specialized Talent: Perhaps the most critical resource gap is human expertise. Successfully implementing and utilizing AI in biotech requires interdisciplinary skills combining domain knowledge (biology, chemistry, medicine) with data science, machine learning, bioinformatics, and software engineering.22 There is a recognized shortage of professionals with this specific skillset.22 Small biotechs struggle to compete with large pharmaceutical companies and tech giants for this limited talent pool.36 Consequently, many small teams lack dedicated data scientists or bioinformatics experts 191, making it challenging to adopt, validate, customize, and interpret the outputs of complex AI tools.

This “trifecta” of constraints – budget, compute, and talent – creates a formidable barrier, preventing many small biotechs from fully harnessing the power of AI and potentially widening the competitive gap with larger organizations.34 Solutions targeting this segment must therefore be designed to mitigate these constraints, offering affordability, abstracting computational complexity, and minimizing the need for deep in-house AI expertise for routine use.

Data Hurdles: Silos, Quality, Integration, and AI-Readiness

Data is the lifeblood of AI, yet managing scientific data effectively poses significant challenges for small biotech teams, often representing the most substantial bottleneck to AI adoption.

- Data Silos: Information critical for R&D is frequently fragmented and isolated across various systems and formats. This includes experimental data in ELNs, sample information in LIMS, instrument output files (often in proprietary formats), analysis results in spreadsheets, data from CRO partners, and unstructured information in literature or reports.4 A major source of frustration and inefficiency is the poor integration between core lab systems like ELNs and LIMS, and between these systems and laboratory instruments.39 This lack of connectivity necessitates manual data transfer, increases the risk of errors, and prevents a holistic view of research data.249

- Data Quality & Standardization: AI models are highly sensitive to the quality of their training data. Inconsistent, incomplete, inaccurate, or poorly annotated data can lead to unreliable models and flawed conclusions.35 Small labs, often lacking standardized data capture protocols or dedicated data curation resources, are particularly susceptible to these issues.33 The lack of adherence to industry data standards (like CDISC for clinical data, or potentially FAIR principles – Findable, Accessible, Interoperable, Reusable – for research data) further complicates data integration, sharing, and regulatory submissions.33 Handling the diversity of scientific data modalities (genomics, proteomics, imaging, chemical structures, text) also requires specialized tools and expertise.37

- Data Volume & Management: Modern biotech research, especially involving genomics, high-throughput screening, or imaging, generates massive datasets (on the order of petabytes for platforms like Recursion’s).37 Storing, managing, and processing this volume requires significant infrastructure and efficient data management strategies.119 Relying on manual processes for data handling is time-consuming, error-prone, and simply not scalable.58 This necessitates the adoption of solutions like data lakes, data warehouses, or integrated “lakehouses,” along with automated data pipelines for transferring and processing data from instruments to analysis platforms.190

- AI-Readiness: Raw scientific data is rarely suitable for direct input into AI models. It needs to be cleaned, curated, normalized, structured, and potentially transformed into specific formats (e.g., feature vectors, embeddings, graphs).37 This data preparation phase can be extremely time-consuming (up to 80% of a data scientist’s time by some estimates 258) and requires specialized skills that small teams often lack. Ensuring data adheres to FAIR principles is also critical for its long-term value and usability by AI systems.208

These pervasive data challenges underscore that any AI agent platform aiming to serve small biotechs must incorporate robust, user-friendly data management capabilities as a core feature. This includes tools for seamless integration, automated ingestion and standardization, quality control, and preparation of data for AI consumption, effectively breaking down silos and creating a reliable data foundation.
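As a rough illustration of what "automated ingestion and standardization" can mean in practice, the sketch below joins records from two siloed exports on a normalized sample identifier, converts potency values to a single unit, and reports rows that fail basic quality checks instead of silently dropping them. All field names, identifiers, and values are hypothetical; real ELN and LIMS exports will differ, and the unit table is a deliberately small assumption.

```python
from typing import Dict, List, Tuple

# ASSUMPTION: only these concentration units occur; values convert to micromolar.
UNIT_TO_MICROMOLAR = {"nM": 1e-3, "uM": 1.0, "mM": 1e3}


def normalize_id(raw: str) -> str:
    """Make sample IDs comparable across systems (trim, upper-case)."""
    return raw.strip().upper()


def harmonize(
    eln_rows: List[Dict[str, str]], lims_rows: List[Dict[str, str]]
) -> Tuple[List[dict], List[Tuple[str, str]]]:
    """Join ELN assay rows to LIMS sample rows and standardize IC50 units.

    Returns (unified_records, issues), where issues lists (sample_id, reason)
    for every ELN row that could not be made AI-ready.
    """
    lims_by_id = {normalize_id(r["sample_id"]): r for r in lims_rows}
    unified, issues = [], []
    for row in eln_rows:
        sid = normalize_id(row.get("sample_id", ""))
        lims = lims_by_id.get(sid)
        if lims is None:
            issues.append((sid, "no matching LIMS record"))
            continue
        unit = row.get("ic50_unit", "")
        if unit not in UNIT_TO_MICROMOLAR:
            issues.append((sid, f"unrecognized unit: {unit!r}"))
            continue
        try:
            value = float(row.get("ic50", ""))
        except ValueError:
            issues.append((sid, "missing or non-numeric IC50"))
            continue
        unified.append({
            "sample_id": sid,
            "compound": lims["compound"],
            "ic50_uM": value * UNIT_TO_MICROMOLAR[unit],
        })
    return unified, issues


# Hypothetical exports from two unconnected systems.
eln_export = [
    {"sample_id": "s-001 ", "ic50": "250", "ic50_unit": "nM"},
    {"sample_id": "S-002", "ic50": "1.2", "ic50_unit": "uM"},
    {"sample_id": "S-003", "ic50": "", "ic50_unit": "uM"},
    {"sample_id": "S-004", "ic50": "5", "ic50_unit": "mg/L"},
    {"sample_id": "S-999", "ic50": "3", "ic50_unit": "uM"},
]
lims_export = [
    {"sample_id": "S-001", "compound": "CMPD-A"},
    {"sample_id": "S-002", "compound": "CMPD-B"},
    {"sample_id": "S-003", "compound": "CMPD-C"},
    {"sample_id": "S-004", "compound": "CMPD-D"},
]

if __name__ == "__main__":
    records, problems = harmonize(eln_export, lims_export)
    print("unified:", records)
    print("issues:", problems)
```

Returning the rejected rows alongside the clean ones is a deliberate choice here: for a small team, knowing which records need manual curation is often as useful as the harmonized table itself.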

Barriers to AI Tool Adoption in Smaller Labs

Taken together, the challenges discussed above point to several key barriers that specifically impede the adoption of AI agent tools by small and medium-sized biotech laboratories:

- Prohibitive Cost: The high upfront and ongoing costs associated with acquiring, implementing, customizing, and maintaining sophisticated AI platforms, required infrastructure, and associated software licenses are often beyond the reach of tightly budgeted startups.22

- Lack of Specialized Expertise: Small teams typically lack the dedicated personnel with the necessary blend of AI/ML, data science, bioinformatics, cheminformatics, and domain expertise required to effectively select, validate, deploy, manage, and interpret the results from complex AI tools.22

- Data Infrastructure and Quality Issues: Pre-existing problems with data silos, inconsistent data quality, lack of standardization, and difficulties integrating data from various lab systems (ELNs, LIMS, instruments) make it challenging to create the high-quality, unified datasets required for training and running AI agents effectively.33

- Computational Infrastructure Demands: The need for significant computational power (HPC) for training and running advanced AI models presents a barrier for labs lacking in-house clusters or the expertise/budget to manage scalable cloud resources effectively.36

- Trust, Validation, and Interpretability: Concerns about the “black box” nature of some AI models, the difficulty in validating their outputs rigorously, ensuring reproducibility, and interpreting their predictions hinder trust and adoption, particularly in a regulated environment.35 Skepticism regarding the accuracy and reliability of AI outputs persists.86

- Usability and Workflow Integration: Many existing AI tools or platforms may not be designed with the bench scientist end-user in mind, featuring complex interfaces or requiring coding skills.40 Integrating these tools smoothly into existing, often already complex, lab workflows without causing disruption is a significant challenge.36 Organizational resistance to change can also play a role.41

- Regulatory Uncertainty: The evolving nature of regulatory guidelines from bodies like the FDA and EMA regarding the use and validation of AI/ML in drug development creates uncertainty and potential compliance hurdles for companies, especially smaller ones lacking dedicated regulatory expertise.22

Overcoming these barriers is essential for enabling smaller biotechs, which are significant drivers of innovation 96, to benefit from the productivity gains promised by AI agents.

Evaluating Current AI Agent Platforms for Small Biotech Needs

Assessing the suitability of existing AI agent platforms and related tools for small biotech research teams requires evaluating them against the specific challenges and constraints identified in Section 5. As of April 2025, while many powerful platforms exist, significant gaps remain in meeting the unique requirements of smaller organizations.

- Dominant AI-Native Biotech Platforms (Recursion, Insilico, BenevolentAI, Atomwise):

- Strengths: These companies possess cutting-edge AI technology, often integrated with extensive proprietary datasets and sometimes wet-lab capabilities, demonstrating potential for significant R&D acceleration.28 They showcase end-to-end capabilities from target ID to preclinical candidates.82 Some have shown high performance in usability/accuracy benchmarks.169

- Weaknesses for Small Biotechs: Their primary business models often revolve around high-value partnerships with large pharma or developing their own internal pipelines.91 Direct platform access for smaller companies is often unclear or likely expensive, with pricing typically requiring direct contact rather than transparent tiers.161 While some offer APIs 156, leveraging these effectively still requires significant in-house technical expertise. Atomwise’s historical AIMS program for non-profits 168 and Recursion’s accelerator fund 130 are exceptions but don’t represent broad platform access.

- Computational Science Platforms (Schrödinger, BIOVIA):

- Strengths: Schrödinger offers a mature, widely used platform with a strong physics basis, increasingly incorporating AI.85 They explicitly offer customizable packages and tiered pricing potentially suitable for startups 147, along with extensive training and support.151 BIOVIA also integrates generative AI (GTD) and ML tools into its platform.265

- Weaknesses for Small Biotechs: These platforms can be complex, requiring significant training and potentially computational chemistry expertise to use effectively.59 While Schrödinger offers startup pricing, the cost may still be substantial for very early-stage companies. Their core strength remains in molecular modeling and simulation, potentially lacking the broader agentic workflow automation capabilities seen in some AI-native platforms.

- Specialized Platforms (Causaly, Benchling, SciSpot):

- Strengths: Causaly provides powerful AI for knowledge discovery and hypothesis generation, addressing a key research bottleneck, and offers agentic features like ‘Discover’.8 Benchling excels at providing a unified data foundation and workflow management system, crucial for AI-readiness, with strong integration capabilities and usability favored by many startups over the past decade.181 SciSpot, a newer player from Canada, offers strong data infrastructure options and an AI agent platform, is well reviewed by users, and is priced affordably for small biotechs.

- Weaknesses for Small Biotechs: Causaly’s pricing and access model for small labs is unclear.161 Benchling, while popular, can become expensive as labs scale, and some users report frustrations with adaptability.39 Neither Causaly nor Benchling, on its own, currently offers the full spectrum of autonomous agent capabilities across the entire R&D workflow (e.g., controlling lab robots or fully automating multi-step discovery campaigns). SciSpot does offer a full spectrum of autonomous agent capabilities, but it is a less established platform and brand than an incumbent like Benchling.

- Cloud Providers (AWS, Google Cloud) & Open Source (DeepChem, RDKit):

- Strengths: Offer unparalleled scalability, access to compute resources, and foundational AI models/tools at potentially lower entry costs.26 Open source removes licensing fees entirely.51 Marketplaces provide access to diverse third-party tools.192

- Weaknesses for Small Biotechs: Require significant in-house technical expertise (AI/ML, data engineering, cloud architecture, cheminformatics) to integrate, manage, validate, and utilize effectively.36 Building a cohesive agentic platform from these components is a major undertaking, far beyond the capabilities of most small research teams. Usability for non-expert scientists is often low without significant abstraction layers.51

- CROs with AI Services:

- Strengths: Provide access to AI capabilities and expertise on a project basis, potentially suitable for specific tasks without platform investment.29

- Weaknesses for Small Biotechs: CRO services can be expensive for ongoing needs. Data ownership and integration back into the startup’s systems can be challenging, and the startup has less control over the process than it would with an in-house platform.

Overall Assessment: No single existing solution perfectly addresses the needs of small biotech research teams for a cohesive, user-friendly, cost-effective AI agents platform. Dominant players are often too expensive or partnership-focused. Specialized platforms cover only parts of the workflow. Cloud/open-source requires too much expertise. CROs offer services, not integrated platforms. Schrödinger comes closest in terms of offering accessible software packages and support for startups, but its core focus remains computational modeling rather than broad agentic workflow automation. This gap highlights a clear market opportunity.

Opportunity Analysis: A Cohesive AI Agents Platform for Small Biotechs

The analysis of AI agent capabilities, the competitive landscape, and the specific pain points of small biotech research teams reveals a significant and underserved market niche. A substantial opportunity exists to develop and offer a cohesive AI agents platform explicitly designed to meet the unique requirements and overcome the constraints faced by these smaller, innovative organizations.

Unmet Needs:

Small biotech teams require solutions that bridge the gap between powerful but complex/expensive enterprise platforms and fragmented, expertise-intensive component tools (cloud services, open-source libraries). Their key unmet needs include:

- Affordability and Transparent Pricing: Access to advanced AI capabilities without prohibitive upfront costs or complex enterprise licensing. Subscription models tiered for startup budgets are needed.35

- Ease of Use for Non-Experts: Intuitive interfaces and workflows that allow bench scientists and researchers with limited AI/coding expertise to leverage agentic capabilities for tasks like literature analysis, hypothesis generation, and basic data processing.40 Low-code/no-code approaches for customization are highly desirable.107

- Seamless Integration: Built-in capabilities to connect easily with commonly used lab software (ELNs like Benchling, Labstep; LIMS), standard data formats (e.g., SMILES, SDF, potentially imaging/omics formats), public databases (PubMed, ChEMBL, PDB), and cloud storage solutions.39 Strong API support is essential.156

- Unified Data Management: Tools to overcome data silos, automate data ingestion, enforce standardization, ensure data quality, and prepare data for AI analysis, ideally within a unified environment.33

- Managed Infrastructure: Solutions that leverage cloud scalability but minimize the need for users to manage complex cloud infrastructure or HPC environments directly.36

- Targeted Agentic Functionality: Access to pre-built, validated AI agents focused on high-impact tasks common in early-stage research workflows (e.g., literature synthesis, target assessment support, experimental design suggestions).53

- Training and Support: Accessible documentation, tutorials, and responsive support tailored to users who may not be AI experts.147

The Opportunity:

The opportunity lies in creating an integrated, cloud-native platform that acts as an “AI co-pilot” or “intelligent lab assistant” specifically for small biotech teams. This platform would bundle essential data management, workflow automation, and agentic AI capabilities into an affordable, easy-to-use package.

Key strategic advantages for such a platform would include:

- Democratization of AI: Providing access to sophisticated agentic AI tools previously only available to large pharma or highly funded AI biotechs.

- Accelerating Innovation: Empowering resource-constrained teams to conduct research faster and more efficiently, potentially increasing the success rate of early-stage pipelines.

- Focus on Core Science: Allowing scientists to spend less time on data wrangling, literature searches, and administrative tasks, and more time on experimental work and strategic thinking.34

- Improved Collaboration: Facilitating better data sharing and workflow management within small teams and with external partners (CROs, academic collaborators).

- Scalability: Offering a solution that can grow with the startup, from initial discovery through preclinical stages, potentially leveraging cloud elasticity.

Success would depend on delivering tangible value in terms of time savings, cost reduction, or improved research outcomes, packaged in a way that directly addresses the operational realities and resource limitations of small biotech companies.

Potential Features and Functionalities for a Tailored AI Agents Platform

Based on the identified needs and opportunities, a cohesive AI agents platform tailored for small biotech research teams could offer the following features and functionalities:

- Core Platform Architecture:

- Cloud-Native Backend: Leveraging scalable and cost-effective cloud infrastructure (e.g., AWS, Google Cloud) to handle data storage and computation, minimizing on-premises requirements.190

- User-Friendly Web Interface: An intuitive dashboard designed for scientists, not just AI experts, providing access to data, workflows, and agent controls.59

- Agent Orchestration Engine: A system to manage and coordinate the execution of tasks by different AI agents (internal or potentially integrated external models/APIs).4

- Security & Compliance: Robust security measures (encryption, access controls) and features supporting compliance needs (e.g., audit trails, potential GxP alignment features).40
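To make the orchestration idea more concrete, here is a minimal sketch, assuming agents are plain Python callables that read from and add to a shared context dictionary. The `Orchestrator` class, the two stand-in agents, and the dependency format are all hypothetical and do not describe any existing product's design; only `graphlib.TopologicalSorter` is a real standard-library class (Python 3.9+).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


class Orchestrator:
    """Runs registered agents in dependency order over a shared context."""

    def __init__(self):
        self._agents = {}      # name -> callable(context) -> dict
        self._depends_on = {}  # name -> set of prerequisite agent names

    def register(self, name, agent, depends_on=()):
        self._agents[name] = agent
        self._depends_on[name] = set(depends_on)

    def run(self, context):
        # static_order() yields prerequisites before the agents that need them
        # and raises graphlib.CycleError if the dependencies form a cycle.
        order = TopologicalSorter(self._depends_on).static_order()
        trace = []
        for name in order:
            # Each agent returns new keys to merge into the shared context.
            context.update(self._agents[name](context))
            trace.append(name)
        return context, trace


# Stand-in agents; real ones would call models, databases, or lab systems.
def literature_agent(ctx):
    return {"papers": [f"paper about {ctx['target']}"]}


def hypothesis_agent(ctx):
    count = len(ctx["papers"])
    return {"hypothesis": f"{ctx['target']} is supported by {count} paper(s)"}
```

Registering `hypothesis_agent` with `depends_on=["literature"]` ensures the literature step runs first regardless of registration order. The returned trace is the kind of record an audit trail could build on.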

- Integration Capabilities:

- ELN/LIMS Connectors: Pre-built integrations or robust APIs to connect seamlessly with popular ELNs (e.g., Benchling, Labstep, SciNote) and LIMS, enabling bidirectional data flow.39

- Instrument Data Integration: Support for common instrument data formats (e.g., potentially via standards like JCAMP-DX, mzML, or vendor-specific APIs where available) and tools for automated data capture.39

- Public Database Connectors: Agents capable of querying and retrieving information from essential public resources like PubMed, ChEMBL, UniProt, PDB, ClinicalTrials.gov.4

- Cloud Storage Integration: Ability to read from and write to common cloud storage (e.g., S3, Google Cloud Storage).185

- Open API: A well-documented API allowing integration with other internal or third-party tools and custom scripts.156
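As one example of what a public database connector could look like, the sketch below builds a PubMed search request against NCBI's E-utilities ESearch endpoint and extracts article IDs from a parsed JSON response. The function names are hypothetical, and the HTTP call itself is left out; a production connector would also need to handle rate limits, API keys, and errors.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search_url(query, max_results=20):
    """Return an ESearch URL asking PubMed for IDs of matching articles."""
    params = {
        "db": "pubmed",         # search the PubMed database
        "term": query,          # free-text or fielded query
        "retmode": "json",      # request a JSON response
        "retmax": max_results,  # cap the number of returned IDs
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


def extract_pmids(esearch_json):
    """Pull the list of PubMed IDs out of a parsed ESearch JSON response."""
    return esearch_json.get("esearchresult", {}).get("idlist", [])
```

An agent could pass the returned IDs to a follow-up fetch step to retrieve abstracts for summarization.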

- Data Management Features:

- Automated Data Ingestion & Parsing: Tools to automatically ingest data from connected sources and parse relevant information from various file formats (text, tables, potentially images).190

- Data Standardization & Curation: Features to help standardize terminology, units, and formats; tools for metadata tagging and annotation to ensure data is AI-ready and FAIR.33

- Unified Data Repository/Knowledge Graph: A centralized, searchable repository (potentially a lakehouse architecture 190) that integrates internal lab data with relevant external knowledge, breaking down silos.38

- Data Quality Monitoring: Automated checks or agent-driven analysis to flag potential data inconsistencies or quality issues.56

- AI Agent Functionalities (Pre-built & Customizable):

- Literature Research Agent: Takes natural language queries, searches PubMed/other sources, retrieves relevant papers, summarizes findings, extracts key entities (genes, proteins, diseases, compounds), and identifies relationships.1

- Hypothesis Generation Agent: Analyzes integrated internal and external data to suggest novel target-disease associations, potential drug repurposing opportunities, or new experimental avenues based on identified patterns.50